This post is part of the Unity daily release process blog post suite.

As part of the new Unity release procedure, let's first have a look at the start of a branch's story: how does it reach trunk?

The merge procedure

Starting with the 12.04 development cycle, we needed upstream to be able to get their changes into trunk reliably and easily. Ensuring that every commit in trunk passes some basic unit tests and doesn't break the build obviously requires some automation. Here comes the merger bot.

Proposing my branch for merging: general workflow

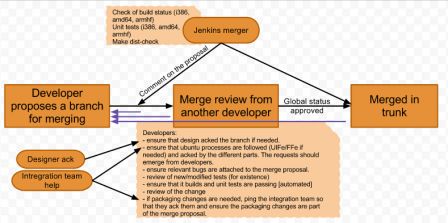

We require peer review of every merge request on any project where we are upstream. No direct commits to trunk. This means that any code change is validated by a human first. In addition to this, once the branch is approved, it will be:

- built on most architectures (i386, amd64, armhf) in a clean environment (chroot with only the minimal dependencies)

- tested: the unit tests are run (as part of the packaging setup) on those architectures

Only if all of this passes is the branch merged into trunk. This way, we know that trunk already meets a high standard.
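To make the gating rule concrete, here is a minimal sketch of the decision the merger has to take. It is not the actual merger bot code: the `ArchResult` type and the `can_merge` function are hypothetical names, and only the list of architectures comes from this post.

```python
from dataclasses import dataclass
from typing import Sequence

# Architectures named in this post; everything else here is illustrative.
ARCHITECTURES = ("i386", "amd64", "armhf")


@dataclass
class ArchResult:
    """Outcome of the clean-chroot build for one architecture (hypothetical type)."""
    arch: str
    build_ok: bool
    tests_ok: bool


def can_merge(approved: bool, results: Sequence[ArchResult]) -> bool:
    """Allow the merge only if the branch is approved and every
    architecture both built and passed its unit tests."""
    if not approved:
        return False
    by_arch = {r.arch: r for r in results}
    # A missing architecture counts as a failure: no result, no merge.
    return all(
        arch in by_arch and by_arch[arch].build_ok and by_arch[arch].tests_ok
        for arch in ARCHITECTURES
    )


if __name__ == "__main__":
    results = [ArchResult(arch, True, True) for arch in ARCHITECTURES]
    print(can_merge(True, results))
```

The point of the sketch is that approval alone is never enough: a single failing or missing architecture keeps the branch out of trunk.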

You will notice in this example of a merge request that ahead of this phase (thanks to the work of Martin Mrazik and Francis Ginther), a continuous integration job kicks in to give some early feedback to both the developer and the reviewer. This can indicate whether the branch is good for merging even before it is approved. The job runs again whenever an additional commit is proposed. This rapid feedback loop gives an additional indication of the branch quality, plus a direct link to a public Jenkins instance to see any issues that came up during the build.

Once the global status of a merge request is set to "approved", the merger validates the branch and then takes the commit message (on some projects, falling back to the description if no commit message is set). It also picks up any attached bug reports (attached manually to the merge proposal by the developer, or directly in a commit with "bzr commit --fixes lp:<bugnumber>") and merges all of that into the mainline, as you can see here.
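The commit message fallback described above can be sketched as follows. This is only an illustration under stated assumptions: `MergeProposal`, `pick_commit_message` and `bug_fix_tags` are hypothetical names, not the merger's real API. The only detail taken from this post is the "lp:<bugnumber>" form used with "bzr commit --fixes".

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MergeProposal:
    """Hypothetical, simplified view of a merge proposal."""
    commit_message: Optional[str]
    description: Optional[str]
    bugs: List[int] = field(default_factory=list)


def pick_commit_message(mp: MergeProposal,
                        allow_description_fallback: bool = True) -> Optional[str]:
    """Use the commit message if set; otherwise, on projects that allow it,
    fall back to the description. Return None if nothing usable is set."""
    if mp.commit_message and mp.commit_message.strip():
        return mp.commit_message.strip()
    if allow_description_fallback and mp.description and mp.description.strip():
        return mp.description.strip()
    return None


def bug_fix_tags(mp: MergeProposal) -> List[str]:
    """Format attached bugs the way "bzr commit --fixes" expects them."""
    return [f"lp:{number}" for number in sorted(set(mp.bugs))]


if __name__ == "__main__":
    # The bug numbers below are made up for the example.
    mp = MergeProposal(commit_message=None,
                       description="Fix launcher redraw",
                       bugs=[1002, 1001, 1002])
    print(pick_commit_message(mp))
    print(bug_fix_tags(mp))
```

The `allow_description_fallback` flag stands in for the per-project setting: where it is off, a proposal without a commit message simply yields nothing to merge with.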

How to handle dependencies, new files shipped and similar items

We said in the previous section that the builds are done in a clean chroot environment. But we can have dependencies that have not been released into the distribution yet. So how do we handle those dependencies, detect them and take the latest stack available?

For that, we are using our Debian packages. As packages are the final "product" delivered to our users, using them here, along with tools similar to those we use for the Ubuntu distribution itself, is a great help. This means that we have a local repository with the latest "trunk build" packages (appending "bzr<revision>" to the current Ubuntu package version), so that when Unity is being built, it grabs the latest (possibly locally built) Nux and Compiz.
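As a rough illustration of the versioning and of how a build picks its dependencies, here is a small sketch. The function names and the version numbers are made up, and the real scheme may use a separator or extra fields that this post does not describe. Preferring the local repository is also a simplification of "grab the latest".

```python
from typing import Mapping


def trunk_build_version(ubuntu_version: str, revision: int) -> str:
    """Append "bzr<revision>" to the current Ubuntu package version.

    Assumption: plain concatenation with no separator.
    """
    if revision < 0:
        raise ValueError("revision must be non-negative")
    return f"{ubuntu_version}bzr{revision}"


def resolve_dependency(name: str,
                       local_repo: Mapping[str, str],
                       distro: Mapping[str, str]) -> str:
    """Pick the version a build would use: the local trunk build when one
    exists, otherwise whatever the distribution ships."""
    if name in local_repo:
        return local_repo[name]
    return distro[name]


if __name__ == "__main__":
    # Hypothetical versions, for illustration only.
    distro = {"nux": "1.0-0ubuntu1", "libfoo": "2.3-1"}
    local_repo = {"nux": trunk_build_version("1.0-0ubuntu1", 42)}
    print(resolve_dependency("nux", local_repo, distro))
    print(resolve_dependency("libfoo", local_repo, distro))
```

Because the suffix is appended to the version already in Ubuntu, the trunk build is expected to sort after the released package, which is why the local one wins whenever it is present.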

OK, we are using packages, but how do we ensure that when I have a new requirement or dependency, or when I'm shipping a new file, the packaging stays in sync with this merge request? Historically, the packaging branch was separate from upstream. This was mainly for 3 reasons:

- we don't really want to require that our upstream learns how to package and all the small details around this

- we don't want our stack to be seen as distro-specific; we want to be like any other upstream

- we, the integration team, want to keep control over the packaging

This mostly worked, in the sense that people had to ping the integration team just before setting a merge to "approved" and ensure that no other merges were in progress in the meantime (so as not to pick up the wrong packaging metadata from other branches). However, it's quite clear that this can't scale at all. We did have some rejections because staying in sync was difficult.

So, we decided this cycle to have the packaging inlined in the upstream branch. This doesn't change anything for other distributions, as "make dist" is used to create a tarball (or they can grab any tarball of the daily release from Launchpad) and those don't contain the packaging information. So we are not hurting them here. However, this ensures that what we will deliver to Ubuntu the next day is in sync with what upstream is putting into their code. This work started at the very beginning of the cycle and, thanks to the excellent work of Michael Terry, Mathieu Trudel-Lapierre, Ken VanDine and Robert Bruce Park, we got it in quickly. There were some exceptions where achieving this was really difficult, because the unit tests were not really in shape to work in isolation (meaning in a chroot, with mock objects like Xorg, dbus…). We are still working on getting the last elements bootstrapped into this process and having those tests run smoothly.

I know that using the packaging to build the upstream trunk, and having it inlined, is a drastic change, but from what we have seen since October, the results are pretty good and it seems to have worked out quite well! I would like to thank again the whole awesome product strategy (the Canonical upstream) team for letting that idea go through and for facilitating this process as much as possible. Thanks as well to the Jenkins masters (2 of them already mentioned above, plus Allan LeSage and Victor R. Ruiz) for completing all the Jenkins/merger machinery changes that were needed on each project.

We can't expect every upstream to know everything about packaging, so the integration team is here and available to give any help that is needed. I think that in the long term, basic packaging changes will be done directly by upstream (we are already seeing some people bumping the build-dependency requirement themselves, adding a new file to install, declaring a new symbol as part of the library…). However, we have processes inside the distribution, and only people with upload rights in Ubuntu are supposed to make or review the changes. How does this fit with the new process? We also have feature freeze and other Ubuntu processes: how do we ensure that upstream is not breaking those rules?

Merge guidelines

As you can see in the first diagram, a merge request has to meet some requirements, which come both from the acceptance criteria and from the new conditions introduced by inline packaging:

- Design needs to acknowledge the change if there is any visual change involved

- The developer, the reviewer and the integration team all ensure that Ubuntu processes are followed (UI Freeze/Feature Freeze for instance). If exceptions are required, they check, before approving the merge, that these have been acknowledged by the relevant parties. The integration team can help make this happen smoothly, but the requests should come from the developers.

- Relevant bugs are attached to the merge proposal. This is useful for tracking what changed and for generating the changelog as we'll see in the next part

- Review of new/modified tests (for existence)

- They ensure that it builds and that the unit tests pass (this is automated)

- Another important one, especially when you refactor, is to ensure that integration tests are still passing

- Review of the change itself by another peer contributor

- If the change seems important enough to have a bug report linked, ensure that the merge request is linked to a bug report (and you will get all the praise in debian/changelog as well!)

- If packaging changes are needed, ping the integration team so that they acknowledge them and ensure the packaging changes are part of the merge proposal.

The integration team, in addition to being ready to help any of our upstream developers on request, actively monitors everything that is merged upstream. Everyone on the team is responsible for some part of the whole stack and will spot issues and start a discussion as needed if we are under the impression that some of those criteria are not met. If something is confirmed not to follow those guidelines, anyone can revert it by simply proposing another merge.

This mostly addresses the 2 other concerns about inline packaging interfering by giving upstream control over the packaging. But this is only the first safety net. A second one kicks in as soon as there is a packaging change since the last daily release, which we'll discuss in the next part.

Consequences for other Ubuntu maintainers

Even if we each have our own area of expertise, Ubuntu maintainers can touch any part of what constitutes the distribution (MOTUs on universe/multiverse and core developers on anything). For that reason, we didn't want daily release and inline packaging to change anything for them.

We added a warning in debian/control: the Vcs-Bzr field points to the upstream branch, with a comment above it. This should highlight that any packaging change (if not urgent) needs to be a merge proposal against the upstream branch, just as if we were changing any of the upstream code. This is also how the integration team handles transitions, like any other developer.

However, it sometimes happens that an upload needs to be done in a short period of time and we can't wait for the next daily release. If we can't even wait for a manually triggered daily release, the other option is to upload directly to Ubuntu, as we normally do for other pieces where we are not upstream, and still propose a branch including those changes for merging into the upstream trunk. If the "merge back" is not done, the next daily release for that component will be paused, as it detects that there are newer changes in the distro, and the integration team will take care of backporting the change to the upstream trunk (as we also monitor uploads to the distribution).
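This post doesn't describe how the daily release detects "newer changes in the distro", so the following is only one plausible way to sketch such a check, assuming the version currently in the distribution should appear in the changelog carried by upstream trunk. The function name and the version strings are hypothetical.

```python
from typing import Iterable


def should_pause_daily_release(distro_version: str,
                               trunk_changelog_versions: Iterable[str]) -> bool:
    """Pause when the version in the distro is unknown to upstream trunk,
    meaning a direct upload has not been merged back yet.

    Assumption: trunk's debian/changelog lists every version that was
    uploaded through the normal process.
    """
    return distro_version not in set(trunk_changelog_versions)


if __name__ == "__main__":
    # Hypothetical version strings, for illustration only.
    trunk_versions = ["1.0-0ubuntu1", "1.0-0ubuntu2"]
    print(should_pause_daily_release("1.0-0ubuntu2", trunk_versions))
    print(should_pause_daily_release("1.0-0ubuntu3", trunk_versions))
```

In this sketch, once the direct upload is merged back and its version shows up in trunk's changelog, the check stops pausing the release.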

Of course, it is always best to check with someone on the integration team first if in any doubt. :)

Side note on other positive effects of inline packaging

Inlining all the packages was long, hard work. However, in addition to the benefits highlighted above, it enabled us to standardize all ~60 packages from our internal upstream around best practices. They should now all look familiar once you have touched any of them. Indeed, they all use debhelper 9, --fail-missing to ensure we ship all installed files, symbol files for C libraries using -c4 to ensure we are forced to update them, running autoreconf, debian/copyright using the latest standard, split packages… In addition to being easier for us, it's easier for upstream as well: they are in a familiar environment if they need to make any changes themselves, and they can just use "bzr bd" to build any of them.

Also, we were able to remove or better align the distro patches we had on those components and push them upstream.

Conclusion on an upstream branch flow

So, you should now know everything about how upstream and packaging changes are integrated into the upstream branch with this new process, why we did it this way and what benefits we immediately get from it. I think having the same workflow for packaging and upstream changes is a net benefit: we can ensure that what we deliver across the two is coherent, of a higher quality standard, and under control. This is what I would keep in mind if I had only one thing to remember from all this :). Finally, we are taking into account the case of other maintainers needing to make changes to those components in Ubuntu, and we try to keep our process flexible for them.

The next part will discuss how one particular stack is validated and uploaded to the distribution on a daily basis. Stay tuned!