We are starting to see multiple awesome code contributions and suggestions on our Ubuntu Loves Developers effort and we are eagerly waiting on yours! As a consequence, the spectrum of supported tools is going to expand quickly and we need to ensure that all those different targeted developers are well supported, on multiple releases, always delivering the latest version of those environments, at anytime.

A huge task that we can only support thanks to a large suite of tests! Here are some details on what we currently have in place to achieve and ensure this level of quality.

Different kinds of tests

pep8 test

The pep8 test is there to ensure code quality and consistency checking. Tests results are trivial to interpret.

This test is running on every commit to master, on each release during package build as well as every couple of hours on jenkins.

small tests

Those are basically unit tests. They are enabling us to quickly see if we've broken anything with a change, or if the distribution itself broke us. We try to cover in particular multiple corner cases that are easy to test that way.

They are running on every commit to master, on each release during package build, every time a dependency is changed in Ubuntu thanks to autopkgtests and every couple of hours on jenkins.

large tests

Large tests are real user-based testing. We execute udtc and type in stdin various scenarios (like installing, reinstalling, removing, installing with a different path, aborting, ensuring the IDE can start…) and check that the resulting behavior is the one we are expecting.

Those tests enables us to know if something in the distribution broke us, or if a website changed its layout, the download links are modified, or if a newer version of a framework can't be launched on a particular Ubuntu version or configuration. That way, we are aware, ideally most of the time even before the user, that something is broken and can act on it.

Those tests are running every couple of hours on jenkins, using real virtual machines running an Ubuntu Desktop install.

medium tests

Finally, the medium tests are inheriting from the large tests. Thus, they are running exactly the same suite of tests, but in a Docker containerized environment, with mock and small assets, not relying on the network or any archives. This means that we ship and emulate a webserver delivering web pages to the container, pretending we are, for instance, https://developer.android.com. We then deliver fake requirements packages and mock tarballs to udtc, and running those.

Implementing a medium tests is generally really easy, for instance:

class BasicCLIInContainer(ContainerTests, test_basics_cli.BasicCLI):

"""This will test the basic cli command class inside a container"""

is enough. That means "takes all the BasicCLI large tests, and run them inside a container". All the hard work, wrapping, sshing and tests are done for you. Just simply implement your large tests and they will be able to run inside the container with this inheritance!

We added as well more complex use cases, like emulating a corrupted downloading, with a md5 checksum mismatch. We generate this controlled environment and share it using trusted containers from Docker Hub that we generate from the Ubuntu Developer Tools Center DockerFile.

Those tests are running as well every couple of hours on jenkins.

By comparing medium and large tests, as the first is in a completely controlled environment, we can decipher if we or the distribution broke us, or if a change from a third-party changing their website or requesting newer version requirements impacted us (as the failure will only occurs on the large tests and not in the medium for instance).

Running all tests, continuously!

As some of the tests can show the impact of external parts, being the distribution, or even, websites (as we parse some download links), we need to run all those tests regularly[1]. Note as well that we can experience different results on various configurations. That's why we are running all those tests every couple of hours, once using the system installed tests, and then, with the tip of master. Those are running on various virtual machines (like here, 14.04 LTS on i386 and amd64).

By comparing all this data, we know if a new commit introduced regressions, if a third-party broke and we need to fix or adapt to it. Each testsuites has a bunch of artifacts attached to be able to inspect the dependencies installed, the exact version of UDTC tested here, and ensure we don't corner ourself with subtleties like "it works in trunk, but is broken once installed".

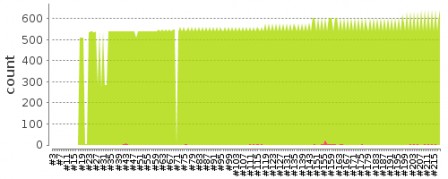

You can see on that graph that trunk has more tests (and features… just wait for some days before we tell more about them ;)) than latest released version.

As metrics are key, we collect code coverage and line metrics on each configuration to ensure we are not regressing in our target of keeping high coverage. That tracks as well various stats like number of lines of code.

Conclusion

Thanks to all this, we'll probably know even before any of you if anything is suddenly broken and put actions in place to quickly deliver a fix. With each new kind of breakage we plan to back it up with a new suite of tests to ensure we never see the same regression again.

As you can see, we are pretty hardcore on tests and believe it's the only way to keep quality and a sustainable system. With all that in place, as a developer, you should just have to enjoy your productive environment and don't have to bother of the operation system itself. We have you covered!

As always, you can reach me on G+, #ubuntu-desktop (didrocks) on IRC (freenode), or sending any issue or even pull requests against the Ubuntu Developer Tools Center project!